AI Porn Deepfakes: The Consent Crisis You Haven’t Heard Of

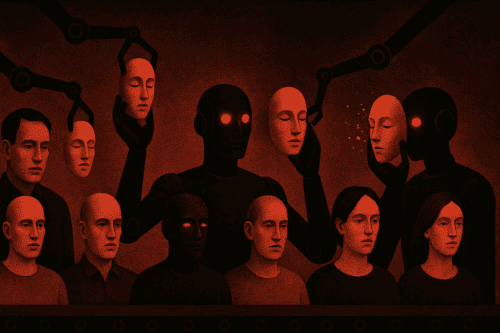

Imagine waking up to find explicit images of you spreading online: images you never made or agreed to. That is the nightmare of AI porn deepfakes, in which AI tools paste someone’s face onto sexual content or generate realistic nudes from ordinary photos without consent. This is far more than a fringe internet prank. It is image-based sexual abuse, and it signals a consent crisis and a growing threat to privacy, dignity, and personal safety.

What AI Porn Deepfakes Are

Deepfakes are synthetic media, created with machine learning, that convincingly mimic a person’s face, voice, or body. In practice, algorithms learn a target’s appearance from their photos and blend it into existing sexual footage to render a fake scene that looks real. Some deepfakes swap in a face or an entire body; others “nudify” a clothed image, transforming it into a fabricated nude.

The tech has creative uses, but porn dominates. Most deepfakes online today are sexual and non-consensual. The result is a realistic illusion that convinces viewers a person did something they never did, erasing the most basic boundary: the right to control one’s sexual image.

Why This Is Sexual Violence

Non-consensual intimate imagery (NCII) covers “revenge porn,” leaks, coerced recordings, and now AI fakes. Deepfake porn fits squarely within NCII because it weaponizes someone’s likeness for sexual display without permission. The harm echoes other sexual violations: humiliation, loss of control, and fear. The lack of physical contact doesn’t reduce the injury. The violation is real because the world sees and judges a sexual image that the victim never consented to create.

Who Gets Targeted by AI Porn Deepfakes

Women are overwhelmingly targeted. Early deepfakes focused mainly on actresses and streamers, but the scope has since expanded: victims now include students, teachers, journalists, creators, and everyday people. You can be targeted simply for having public photos online, so this problem isn’t limited to celebrities or influencers. If your face is on the internet, you are not immune. Many women discover they’ve been targeted only after friends, coworkers, or family stumble upon the fake.

The Human Cost

Victims describe shock, panic, and a lasting sense of violation. Anxiety, depression, insomnia, and hypervigilance are common. Some fear going to work or school. Many delete social accounts, avoid opportunities, and worry that employers, clients, or loved ones saw the fake. Even when a victim proves it’s false, stigma can linger. That reputational harm is the point for many perpetrators: to degrade, silence, or punish.

Why Ethics and Privacy Are on the Line

Consent is the ethical bedrock of sexual expression. Deepfake porn discards consent and treats a person’s image as raw material. It also destroys traditional ideas of privacy. You no longer need a hacked nude to cause harm. A LinkedIn headshot or Instagram selfie can seed a convincing fake. When “seeing is believing” collapses, so does a person’s control over their own identity.

Claims that deepfakes are “art” or “fantasy” fail at the consent line. Free expression doesn’t extend to forging a real person into sexual content against their will. The target’s rights, autonomy, and safety take priority.

Law and Policy: Catch-Up Mode on AI Porn Deepfakes

For years, laws lagged. Many “revenge porn” statutes covered only real images, not AI fakes. That’s changing. Governments are moving to clearly criminalize creating and sharing explicit deepfakes without consent and to require fast platform takedowns. Civil remedies are expanding so victims can sue perpetrators. But gaps remain in jurisdiction, enforcement, and cross-border cases, especially when offenders operate anonymously or overseas.

Platform Responsibility

Platforms are the distribution network. Policies against non-consensual porn exist on major sites, but enforcement is uneven. Detection tools that spot deepfake artifacts are improving, and new rules increasingly require swift removals. Hash-matching services let victims submit a “fingerprint” so platforms can block reuploads. Still, many fakes spread fast, and shady sites cater to demand.
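To make the “fingerprint” idea concrete, here is a minimal Python sketch of one simple perceptual hash, an average hash (aHash). It is an illustration under stated assumptions, not how any particular service works: it assumes the image has already been decoded into a grid of grayscale values from 0 to 255, and the function names and the 10-bit match threshold are hypothetical choices. Production hash-matching systems use sturdier algorithms, but the principle is the same: the victim submits only a short fingerprint, and platforms compare it against fingerprints of new uploads, so near-duplicates can be blocked without the original image being shared.

```python
from typing import List

Grid = List[List[float]]  # hypothetical input: decoded grayscale pixels, row by row


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Compute a 64-bit average hash (aHash) for an 8x8 grid (an illustrative choice)."""
    h, w = len(pixels), len(pixels[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the hash grid")
    # Shrink the image: average each block of pixels into one cell of a size x size grid.
    cells = []
    for gy in range(size):
        y0, y1 = gy * h // size, (gy + 1) * h // size
        for gx in range(size):
            x0, x1 = gx * w // size, (gx + 1) * w // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    # One bit per cell: 1 if that region is brighter than the image's overall mean.
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def is_likely_match(a: int, b: int, threshold: int = 10) -> bool:
    """Treat fingerprints within `threshold` differing bits (hypothetical cutoff) as the same image."""
    return hamming(a, b) <= threshold


if __name__ == "__main__":
    # Synthetic 64x64 images: a left-to-right gradient, a slightly brighter copy,
    # and an unrelated top-to-bottom gradient.
    original = [[x * 4 for x in range(64)] for _ in range(64)]
    brighter = [[v + 3 for v in row] for row in original]
    different = [[y * 4 for _ in range(64)] for y in range(64)]
    h0, h1, h2 = (average_hash(img) for img in (original, brighter, different))
    print(is_likely_match(h0, h1))  # True: same picture, slightly brightened
    print(hamming(h0, h2))          # 32: a different picture
```

Because each bit records only whether a region is brighter than the image’s own average, a small uniform brightness change (one that does not clip at 255) leaves the fingerprint unchanged, while a genuinely different image lands far away in Hamming distance. That tolerance to minor edits is why perceptual hashes, rather than exact file checksums, are used to catch re-uploads.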

What Works: Practical Steps and Emerging Solutions

For victims and supporters, action beats despair. Here’s a concise playbook.

Immediate steps if you’re targeted

- Document: Capture URLs, timestamps, usernames, and screenshots. Keep a log.
- Report quickly: Use each platform’s NCII or intimate-image abuse form for faster removal.
- Send notices: If you own the copyright in the original photos or footage used in the fake (for example, selfies you took), file DMCA takedown notices.
- Search and sweep: Run reverse-image searches and track exact phrases from titles or captions to find duplicates.
- Ask for help: Contact NCII hotlines and legal aid groups for template letters and support.
- Consider counsel: A lawyer can issue preservation letters, subpoena platforms, and advise on civil claims.
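For the documentation step, a plain spreadsheet works, but a short script can keep entries consistent. The Python sketch below is one hypothetical way to do it, not a tool any hotline or court requires: it appends each sighting to a CSV file with a UTC timestamp and, when a screenshot is attached, records the file’s SHA-256 hash so you can later check that the saved screenshot has not changed since it was logged. The function names, file layout, and field names are all assumptions; ask your lawyer or NCII hotline which format they prefer.

```python
import csv
import hashlib
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Hypothetical column layout for the evidence log.
FIELDS = ["logged_at_utc", "url", "username", "note", "screenshot", "screenshot_sha256"]


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large screenshots or recordings don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_evidence(log_path: Path, url: str, username: str, note: str,
                 screenshot: Optional[Path] = None) -> dict:
    """Append one sighting to the CSV log, writing the header only when the file is new."""
    row = {
        "logged_at_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "url": url,
        "username": username,
        "note": note,
        "screenshot": str(screenshot) if screenshot else "",
        "screenshot_sha256": sha256_of(screenshot) if screenshot else "",
    }
    new_file = not log_path.exists()
    with log_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if new_file:
            writer.writeheader()
        writer.writerow(row)
    return row
```

For example, `log_evidence(Path("evidence_log.csv"), "https://example.com/post/123", "@uploader", "first sighting", Path("shot1.png"))` adds one row. Keep the original screenshots alongside the log, and back both up somewhere the perpetrator cannot reach.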

Prevention and hygiene

- Limit high-resolution public headshots. Tighten privacy settings where possible.
- Remove geotags and personal metadata from images.
- Use platform alerts for new mentions of your name or handle.
- Talk to friends and colleagues about not resharing suspected fakes.
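Many phones and photo apps offer a setting that strips location data when you share a picture, and that is the easiest route. To show what removing metadata means at the file level, here is a minimal Python sketch for JPEG files only. It assumes the metadata you care about (EXIF, including GPS tags, and XMP) sits in APP1 segments, which is where JPEG files normally store it, and it copies every other segment unchanged. The function names are hypothetical, and this is not a complete scrubber: other metadata blocks such as comment or APP13 segments are left in place, and dropping EXIF also drops the orientation tag, so some photos may display rotated afterwards.

```python
from pathlib import Path

SOS, EOI, APP1 = 0xDA, 0xD9, 0xE1  # start-of-scan, end-of-image, APP1 (EXIF/XMP) markers


def strip_jpeg_app1(data: bytes) -> bytes:
    """Return a copy of JPEG bytes with every APP1 segment (EXIF/XMP, incl. GPS) removed.

    Assumes a well-formed JPEG: everything from the start-of-scan marker onward is
    compressed image data and is copied unchanged.
    """
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing start-of-image marker")
    out = bytearray(data[:2])
    i = 2
    while i + 1 < len(data):
        if data[i] != 0xFF:
            raise ValueError(f"expected a marker at byte {i}")
        marker = data[i + 1]
        if marker == 0xFF:          # padding byte before a marker: skip it
            i += 1
            continue
        if marker in (SOS, EOI):    # image data follows: copy the rest as-is
            out += data[i:]
            return bytes(out)
        # Segment length is big-endian and counts its own two bytes, but not the marker.
        length = int.from_bytes(data[i + 2:i + 4], "big")
        if marker != APP1:
            out += data[i:i + 2 + length]
        i += 2 + length
    raise ValueError("truncated JPEG: no start-of-scan marker found")


def strip_file(src: Path, dst: Path) -> None:
    """Write a metadata-reduced copy of `src` to `dst`, leaving the original untouched."""
    dst.write_bytes(strip_jpeg_app1(src.read_bytes()))
```

Writing to a separate output file keeps the original intact, which matters if the unedited photo might later be needed as evidence.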

Schools and Workplaces

- Adopt NCII policies that treat deepfakes as misconduct and harassment.
- Provide a confidential reporting path and clear timelines for response.
- Train staff on evidence collection, support, and non-retaliation.

Platforms and Tech Teams

- Build AI detection into upload pipelines and search.
- Offer a one-click “It’s me; remove this” channel with priority review.
- Participate in hash-sharing coalitions for faster cross-platform removal.
- Preserve logs for law enforcement while protecting victim privacy.

Lawmakers and Regulators

- Define non-consensual deepfake sexual imagery as a specific offense.
- Provide both criminal penalties and civil remedies.
- Require rapid removal and penalties for noncompliant sites.
- Enable cross-border cooperation and extradition where feasible.
- Fund victim support, legal aid, and public education.

Adult Industry and Creators

- Enforce verified consent for all content.
- Publicly condemn deepfake abuse and collaborate on detection.
- Support performer ID verification and content authenticity standards.

AI Deepfakes – Cultural Change Matters

Technology and laws help, but culture decides how often this abuse happens. Treat resharing a suspected fake as aiding abuse. Don’t “just look.” Don’t forward. Believe victims. Make it clear in your communities—online and off—that non-consensual sexual imagery is unacceptable, whether real or AI-forged.

Resources to Know

- Local or national NCII hotlines and legal clinics
- Platform NCII reporting portals
- Hash-matching services for intimate-image takedowns
- Digital safety nonprofits offering templates, tracking tips, and emotional support

What Allies Can Do

If someone you know is targeted, focus on safety and agency. Avoid judgment. Help with reporting, documentation, and time-consuming forms. Offer to contact administrators or HR if relevant. Encourage rest, therapy, and boundaries. Respect the victim’s choices about disclosure and next steps.

The Bottom Line on AI Porn Deepfakes

AI porn exposes a hard truth: technology evolves faster than norms and laws. But the moral rule is simple. If there is no consent, it’s abuse. We can reduce the harm through smarter laws, faster moderation, better tools, and a culture that refuses to consume or spread non-consensual sexual imagery.

Consent must remain the cornerstone of intimate life and digital life alike. Protecting that principle practically, legally, and culturally is how we move from outrage to real safety for everyone online. By reinforcing consent at every level, we can build digital spaces that are safer, more respectful, and grounded in human dignity.
